Concurrent Programming in Java

Challenges

Principles

JMM

Unsafe API

Memory Barriers

Applications

- Threads and Locks

- Package java.util.concurrent

Challenges

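Challenges of concurrent programming:

- The overhead of context switching

- The overhead of creating and destroying threads

- Deadlock

- Hardware and software resource limits

How to reduce context switching

- Lock-free concurrent programming: contention for a lock causes context switches, so avoid locks where the design allows it.

- CAS: the classes in java.util.concurrent.atomic update data with compare-and-swap (CAS) instead of taking a lock.

- Use as few threads as possible: do not create threads you do not need. If there are few tasks but many threads, most of those threads just sit in a waiting state.

- Coroutines: schedule multiple tasks within a single thread and switch between them inside that thread.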
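The CAS item above can be illustrated with a small retry loop. This is only a sketch: the class name CasCounter and the helper run are made up for illustration, and in real code AtomicInteger.incrementAndGet() already does the same thing.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class: a counter updated with an explicit CAS retry loop.
final class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    // Read the current value, compute the next one, and publish it only if
    // no other thread changed the value in between; otherwise retry.
    int increment() {
        while (true) {
            int current = value.get();
            int next = current + 1;
            if (value.compareAndSet(current, next)) {
                return next;
            }
        }
    }

    int get() {
        return value.get();
    }

    // Starts `threads` workers that each call increment() `perThread` times.
    static int run(int threads, int perThread) {
        CasCounter counter = new CasCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }
}
```

No thread ever blocks on a lock here; a thread that loses the race simply retries, so CasCounter.run(4, 1000) should return 4000.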

Measuring context switches

- Lmbench3 measures how long a context switch takes.

- vmstat reports how many context switches occur (the cs column).

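Deadlock

Consequence: once a deadlock occurs, the affected functionality becomes unavailable.

Diagnosis: when a deadlock stops the service from responding, take a thread dump to see which threads are involved.

Avoiding deadlock:

- Avoid having one thread acquire multiple locks at the same time.

- Avoid holding multiple resources inside one lock; try to have each lock guard a single resource.

- Prefer timed locks: use lock.tryLock(timeout, unit) instead of the intrinsic lock mechanism.

- For database locks, lock and unlock on the same database connection; otherwise the unlock may fail.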
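The timed-lock advice can be sketched as follows. The class TimedLocks and the method withBothLocks are hypothetical names; the only library calls used are Lock.tryLock(long, TimeUnit) and Lock.unlock().

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;

// Hypothetical helper: acquire two locks with a timeout instead of blocking forever.
final class TimedLocks {
    // Returns true if both locks were acquired and the action ran,
    // false if either lock could not be acquired within the timeout.
    static boolean withBothLocks(Lock first, Lock second, long timeoutMs, Runnable action) {
        try {
            if (!first.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
                return false; // gave up on the first lock; nothing to release
            }
            try {
                if (!second.tryLock(timeoutMs, TimeUnit.MILLISECONDS)) {
                    return false; // the finally below releases the first lock
                }
                try {
                    action.run();
                    return true;
                } finally {
                    second.unlock();
                }
            } finally {
                first.unlock();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return false;
        }
    }
}
```

A caller that gets false can back off and retry, which turns a potential deadlock into a recoverable failure. Whether retrying is appropriate depends on the application.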

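Resource Limits

What is a resource limit

A resource limit means that, in concurrent programming, execution speed is bounded by the machine's hardware or software resources.

Examples: network bandwidth, disk read/write speed, CPU speed, the number of database connections, and the number of socket connections.

Problems caused by resource limits

The general way to speed up code is to turn its serial parts into concurrent ones.

However, if a section is made concurrent but still executes serially because of a resource limit, the program does not get faster. It gets slower, because context switching and resource scheduling add overhead.

How to deal with resource limits

For hardware limits, consider running the program in parallel on a cluster.

For software limits, consider pooling and reusing resources. For example, reuse database and socket connections through a connection pool, or open only one connection when fetching data from a remote web service.

Concurrent programming under resource limits

Tune the degree of concurrency to the specific resource that is the limiting factor.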

JMM

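Reordering

The compiler and the Java virtual machine optimize a program by changing the order in which its operations execute. In multithreaded programs this reordering can cause visible problems.

Visibility

Whether a value that one thread writes to a shared variable can be seen by another thread.

Shared memory

Shared memory is the storage shared by all threads, that is, heap memory. In the JMM, only memory that can be accessed by multiple threads raises these issues.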

Memory that can be shared between threads is called shared memory or heap memory.

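All instance fields, static fields, and array elements are stored in heap memory and are shared between threads.

Local variables, method parameters, and exception handler parameters are never shared between threads, so they have no memory visibility problems and are unaffected by the memory model.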

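The synchronized keyword

synchronized provides two things: mutual exclusion between threads and synchronization.

Mutual exclusion: a thread acquires the lock on entering a synchronized block and releases it on exit.

Synchronization: the JMM guarantees that every write a thread performs before an unlock is visible to any thread that subsequently locks the same monitor.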
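A minimal sketch of both roles, using a hypothetical SyncCounter class: the synchronized methods make count++ mutually exclusive, and the unlock/lock pairing makes each thread see the latest count.

```java
// Hypothetical example class: a counter guarded by the intrinsic lock of `this`.
final class SyncCounter {
    private int count; // only read or written while holding the lock

    synchronized void increment() {
        count++; // read-modify-write is safe because only one thread holds the lock
    }

    synchronized int get() {
        return count; // the lock also makes earlier writes visible here
    }

    // Starts `threads` workers that each call increment() `perThread` times.
    static int run(int threads, int perThread) {
        SyncCounter counter = new SyncCounter();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    counter.increment();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }
}
```

Without synchronized on increment(), concurrent count++ operations could overwrite each other and the total could come out lower than expected.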

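The volatile keyword

volatile provides two things: synchronization, and atomic access to long and double.

Synchronization: a write to a volatile field by one thread is visible to other threads that subsequently read the field.

Atomicity: the Java specification does not guarantee that assignments to long and double are atomic. Declaring a long or double field volatile makes its reads and writes atomic.

If the value written to a volatile field is visible to thread B, then every write made before that volatile write is visible to B as well.

volatile does not provide mutual exclusion.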
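The rule that earlier writes become visible together with the volatile write can be sketched like this. VolatilePublish and its members are hypothetical names chosen for the example.

```java
// Hypothetical example class: publish a plain field through a volatile flag.
final class VolatilePublish {
    private int payload;            // plain field
    private volatile boolean ready; // volatile flag

    void publish(int value) {
        payload = value; // (1) plain write
        ready = true;    // (2) volatile write; (1) becomes visible along with it
    }

    // Spins until the volatile flag is seen as true, then reads the plain field.
    int awaitPayload() {
        while (!ready) {
            Thread.yield();
        }
        return payload; // guaranteed to see the value written in (1)
    }

    // Runs a reader thread against a writer and returns what the reader saw.
    static int run() {
        VolatilePublish box = new VolatilePublish();
        int[] seen = new int[1];
        Thread reader = new Thread(() -> seen[0] = box.awaitPayload());
        reader.start();
        box.publish(42);
        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }
}
```

If ready were not volatile, the reader might spin forever or observe a stale payload. Note that volatile still gives no mutual exclusion, so it would not make an operation such as payload++ safe.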

happens-before

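The JMM uses the happens-before relation to give programmers cross-thread memory visibility guarantees.

For example, if a write a in thread A happens-before a read b in thread B, the JMM guarantees that a is visible to b even though the two actions run in different threads.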

Each action in a thread happens before every subsequent action in that thread.

An unlock on a monitor happens before every subsequent lock on that monitor.

A write to a volatile field happens before every subsequent read of that volatile.

A call to start() on a thread happens before any actions in the started thread.

All actions in a thread happen before any other thread successfully returns from a join() on that thread.

If an action a happens before an action b, and b happens before an action c, then a happens before c.
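The start() and join() rules, combined with program order and transitivity, are enough to pass plain data between threads safely. A small sketch with a hypothetical StartJoinVisibility class:

```java
// Hypothetical example class: visibility through start() and join() only.
final class StartJoinVisibility {
    static int compute() {
        int[] box = new int[1]; // plain, non-volatile storage
        box[0] = 1;             // written before start(): visible inside the worker

        Thread worker = new Thread(() -> box[0] = box[0] + 41);
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return box[0];          // join() has returned: the worker's write is visible
    }
}
```

No volatile or synchronized is needed here because every access is ordered by happens-before: the initial write precedes start(), and the worker's write precedes the return from join().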

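final fields

The JMM guarantees that final fields are correctly initialized when the constructor finishes and that their values are visible to other threads, provided this does not escape from the constructor.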

Double-Checked Locking

The “Double-Checked Locking is Broken” Declaration

A solution based on volatile

A solution based on final and the constructor

Initialization On Demand Holder (works because class initialization is thread-safe)
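Two of these solutions can be sketched side by side. LazySingletons, Config, and the method names are hypothetical; the volatile variant is correct only under the Java 5+ memory model.

```java
// Hypothetical example class showing two safe lazy-initialization idioms.
final class LazySingletons {
    static final class Config {
        final String name;
        Config(String name) { this.name = name; }
    }

    // Variant 1: double-checked locking. The field must be volatile, otherwise
    // another thread could see a non-null reference to a partially built object.
    private static volatile Config instance;

    static Config viaDoubleChecked() {
        Config local = instance;           // first check, without locking
        if (local == null) {
            synchronized (LazySingletons.class) {
                local = instance;          // second check, under the lock
                if (local == null) {
                    local = new Config("dcl");
                    instance = local;
                }
            }
        }
        return local;
    }

    // Variant 2: initialization-on-demand holder. The JVM initializes Holder
    // lazily, on first access, and class initialization is thread-safe.
    private static final class Holder {
        static final Config INSTANCE = new Config("holder");
    }

    static Config viaHolder() {
        return Holder.INSTANCE;
    }
}
```

The holder variant needs no explicit synchronization and is usually the simpler choice for static singletons; the volatile variant also works for instance fields.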

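Summary of actions

The actions defined by the JMM are mainly:

- normal read

- normal write

- volatile read

- volatile write

- lock

- unlock

- thread start

- thread termination

- the first action of a started thread

- the last action of a thread before it terminates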

Reference

Threads and Locks, Thread Model

The Art of Java Concurrency Programming (Java并发编程的艺术), Chapter 3

Unsafe API

Method Categories

| Category | Method | Desc |
|---|---|---|
| Info | addressSize, pageSize | Just returns some low-level memory information. |
| Objects | allocateInstance, objectFieldOffset | Provides methods for object and its fields manipulation. |
| Classes | staticFieldOffset, defineClass, defineAnonymousClass, ensureClassInitialized | Provides methods for classes and static fields manipulation. |
| Arrays | arrayBaseOffset, arrayIndexScale | Arrays manipulation. |
| Memory | allocateMemory, copyMemory, freeMemory, reallocateMemory, getAddress, getInt, putInt, getLong, putLong, getObject, putObject | Direct memory access methods. |
| CAS | compareAndSwapInt, compareAndSwapLong, compareAndSwapObject | Compare And Swap. |
| Synchronization | park, unpark | Low level primitives for synchronization. |
| Synchronization | monitorEnter, tryMonitorEnter, monitorExit | Low level primitives for synchronization. |
| Synchronization | getIntVolatile, putIntVolatile, getLongVolatile, putLongVolatile | Low level primitives for synchronization. |
| Synchronization | putOrderedInt, putOrderedLong, putOrderedObject | Low level primitives for synchronization. |

Method Details

package sun.misc;

Reference

Memory Barriers

Memory barrier instructions directly control only the interaction of a CPU with its cache, with its write-buffer that holds stores waiting to be flushed to memory, and/or its buffer of waiting loads or speculatively executed instructions. These effects may lead to further interaction among caches, main memory and other processors.

Reorderings

| Can Reorder | 2nd operation | | |
|---|---|---|---|
| 1st operation | Normal Load, Normal Store | Volatile Load, MonitorEnter | Volatile Store, MonitorExit |
| Normal Load, Normal Store | | | No |
| Volatile Load, MonitorEnter | No | No | No |
| Volatile Store, MonitorExit | | No | No |

Where:

- Normal Loads are getfield, getstatic, array load of non-volatile fields.

- Normal Stores are putfield, putstatic, array store of non-volatile fields

- Volatile Loads are getfield, getstatic of volatile fields that are accessible by multiple threads

- Volatile Stores are putfield, putstatic of volatile fields that are accessible by multiple threads

- MonitorEnters (including entry to synchronized methods) are for lock objects accessible by multiple threads.

- MonitorExits (including exit from synchronized methods) are for lock objects accessible by multiple threads.

Final Fields

Loads and Stores of final fields act as “normal” accesses with respect to locks and volatiles, but impose two additional reordering rules:

- A store of a final field (inside a constructor) and, if the field is a reference, any store that this final can reference, cannot be reordered with a subsequent store (outside that constructor) of the reference to the object holding that field into a variable accessible to other threads.

- The initial load (i.e., the very first encounter by a thread) of a final field cannot be reordered with the initial load of the reference to the object containing the final field.

These rules imply that reliable use of final fields by Java programmers requires that the load of a shared reference to an object with a final field itself be synchronized, volatile, or final, or derived from such a load, thus ultimately ordering the initializing stores in constructors with subsequent uses outside constructors.

Barriers Categories

| Barriers | Sequence | Desc |
|---|---|---|
| LoadLoad | Load1; LoadLoad; Load2 | Ensures that Load1’s data are loaded before data accessed by Load2 and all subsequent load instructions are loaded. In general, explicit LoadLoad barriers are needed on processors that perform speculative loads and/or out-of-order processing in which waiting load instructions can bypass waiting stores. On processors that guarantee to always preserve load ordering, the barriers amount to no-ops. |
| StoreStore | Store1; StoreStore; Store2 | Ensures that Store1’s data are visible to other processors (i.e., flushed to memory) before the data associated with Store2 and all subsequent store instructions. In general, StoreStore barriers are needed on processors that do not otherwise guarantee strict ordering of flushes from write buffers and/or caches to other processors or main memory. |
| LoadStore | Load1; LoadStore; Store2 | Ensures that Load1’s data are loaded before all data associated with Store2 and subsequent store instructions are flushed. LoadStore barriers are needed only on those out-of-order processors in which waiting store instructions can bypass loads. |
| StoreLoad | Store1; StoreLoad; Load2 | Ensures that Store1’s data are made visible to other processors (i.e., flushed to main memory) before data accessed by Load2 and all subsequent load instructions are loaded. StoreLoad barriers protect against a subsequent load incorrectly using Store1’s data value rather than that from a more recent store to the same location performed by a different processor. Because of this, on the processors discussed below, a StoreLoad is strictly necessary only for separating stores from subsequent loads of the same location(s) as were stored before the barrier. StoreLoad barriers are needed on nearly all recent multiprocessors, and are usually the most expensive kind. Part of the reason they are expensive is that they must disable mechanisms that ordinarily bypass cache to satisfy loads from write-buffers. This might be implemented by letting the buffer fully flush, among other possible stalls. |

The following table shows how these barriers correspond to JSR-133 ordering rules.

| Required barriers | 2nd operation | | | |
|---|---|---|---|---|
| 1st operation | Normal Load | Normal Store | Volatile Load, MonitorEnter | Volatile Store, MonitorExit |
| Normal Load | | | | LoadStore |
| Normal Store | | | | StoreStore |
| Volatile Load, MonitorEnter | LoadLoad | LoadStore | LoadLoad | LoadStore |
| Volatile Store, MonitorExit | | | StoreLoad | StoreStore |

Interactions with Atomic Instructions

| Required Barriers | 2nd operation | | | | | |
|---|---|---|---|---|---|---|
| 1st operation | Normal Load | Normal Store | Volatile Load | Volatile Store | MonitorEnter | MonitorExit |
| Normal Load | | | | LoadStore | | LoadExit |
| Normal Store | | | | StoreStore | | StoreExit |
| Volatile Load | LoadLoad | LoadStore | LoadLoad | LoadStore | LoadEnter | LoadExit |
| Volatile Store | | | StoreLoad | StoreStore | StoreEnter | StoreExit |
| MonitorEnter | EnterLoad | EnterStore | EnterLoad | EnterStore | EnterEnter | EnterExit |
| MonitorExit | | | ExitLoad | ExitStore | ExitEnter | ExitExit |

In this table, “Enter” is the same as “Load” and “Exit” is the same as “Store”, unless overridden by the use and nature of atomic instructions. In particular:

- EnterLoad is needed on entry to any synchronized block/method that performs a load. It is the same as LoadLoad unless an atomic instruction is used in MonitorEnter and itself provides a barrier with at least the properties of LoadLoad, in which case it is a no-op.

- StoreExit is needed on exit of any synchronized block/method that performs a store. It is the same as StoreStore unless an atomic instruction is used in MonitorExit and itself provides a barrier with at least the properties of StoreStore, in which case it is a no-op.

- ExitEnter is the same as StoreLoad unless atomic instructions are used in MonitorExit and/or MonitorEnter and at least one of these provide a barrier with at least the properties of StoreLoad, in which case it is a no-op.

Recipes

- Issue a StoreStore barrier before each volatile store.

  (On ia64 you must instead fold this and most barriers into corresponding load or store instructions.)

- Issue a StoreStore barrier after all stores but before return from any constructor for any class with a final field.

- Issue a StoreLoad barrier after each volatile store.

  Note that you could instead issue one before each volatile load, but this would be slower for typical programs using volatiles in which reads greatly outnumber writes. Alternatively, if available, you can implement volatile store as an atomic instruction (for example XCHG on x86) and omit the barrier. This may be more efficient if atomic instructions are cheaper than StoreLoad barriers.

- Issue LoadLoad and LoadStore barriers after each volatile load.

  On processors that preserve data dependent ordering, you need not issue a barrier if the next access instruction is dependent on the value of the load. In particular, you do not need a barrier after a load of a volatile reference if the subsequent instruction is a null-check or load of a field of that reference.

- Issue an ExitEnter barrier either before each MonitorEnter or after each MonitorExit.

  (As discussed above, ExitEnter is a no-op if either MonitorExit or MonitorEnter uses an atomic instruction that supplies the equivalent of a StoreLoad barrier. Similarly for others involving Enter and Exit in the remaining steps.)

- Issue EnterLoad and EnterStore barriers after each MonitorEnter.

- Issue StoreExit and LoadExit barriers before each MonitorExit.

- If on a processor that does not intrinsically provide ordering on indirect loads, issue a LoadLoad barrier before each load of a final field.

Removing redundant barriers. The above tables indicate that barriers can be eliminated as follows:

| Original 1st | Original ops | Original 2nd | => | Transformed 1st | Transformed ops | Transformed 2nd |
|---|---|---|---|---|---|---|
| LoadLoad | [no loads] | LoadLoad | => | | [no loads] | LoadLoad |
| LoadLoad | [no loads] | StoreLoad | => | | [no loads] | StoreLoad |
| StoreStore | [no stores] | StoreStore | => | | [no stores] | StoreStore |
| StoreStore | [no stores] | StoreLoad | => | | [no stores] | StoreLoad |
| StoreLoad | [no loads] | LoadLoad | => | StoreLoad | [no loads] | |
| StoreLoad | [no stores] | StoreStore | => | StoreLoad | [no stores] | |
| StoreLoad | [no volatile loads] | StoreLoad | => | | [no volatile loads] | StoreLoad |

Reference

The JSR-133 Cookbook for Compiler Writers

JSR 133 (Java Memory Model) FAQ

Memory barriers (内存屏障)

what-does-a-loadload-barrier-really-do

What is difference between getXXXVolatile vs getXXX in java unsafe?

Atomic*.lazySet

AtomicInteger lazySet vs set

Atomic lazySet

What do Atomic::lazySet/Atomic FieldUpdater::lazySet/Unsafe::putOrdered actually mean?

Add lazySet methods to atomic classes

UNSAFE.putOrderedLong: a Store/Store barrier between this write and any previous store.

UNSAFE.putLongVolatile: a Store/Store barrier between this write and any previous write, and a Store/Load barrier between this write and any subsequent volatile read.

Threads Basics

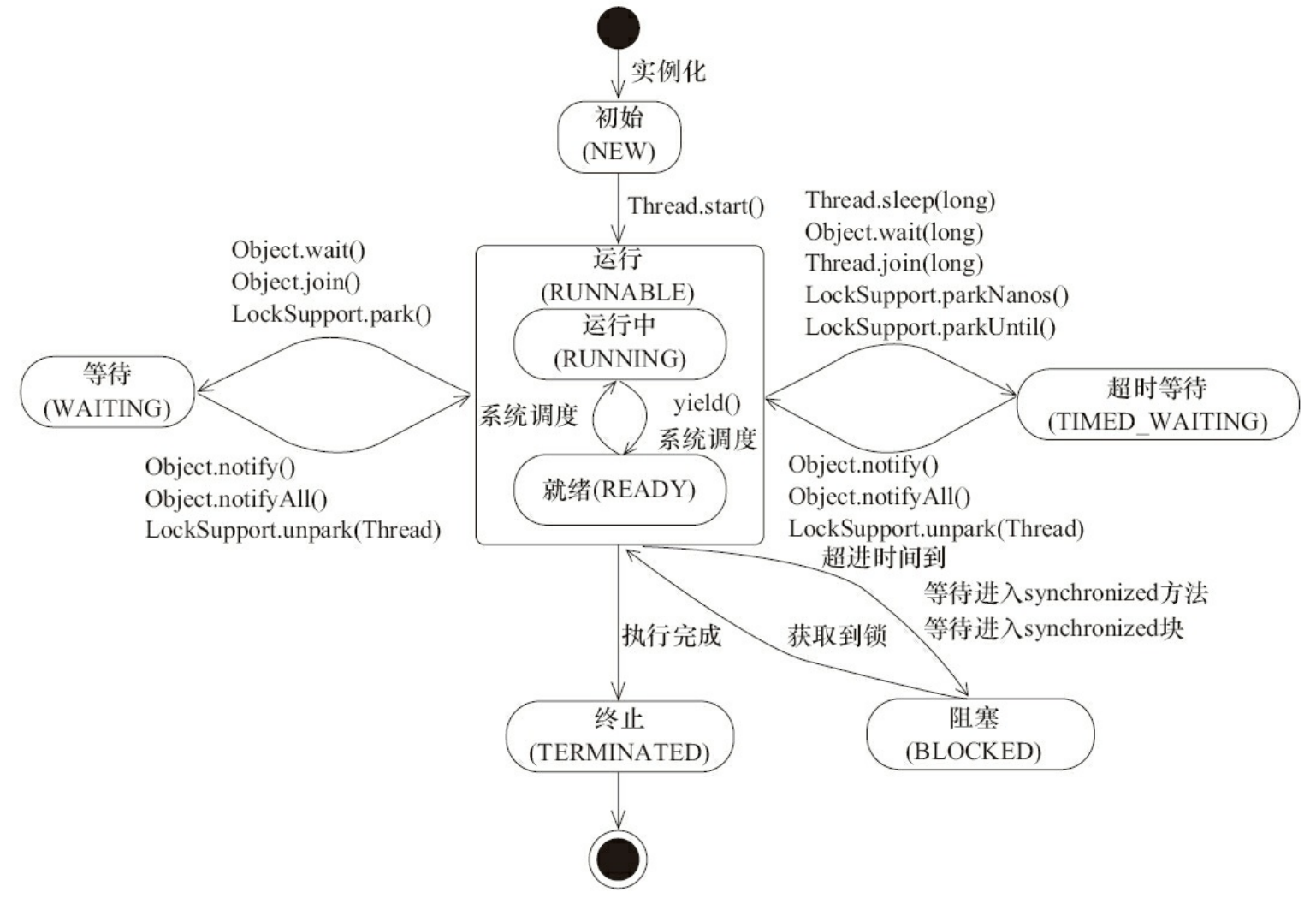

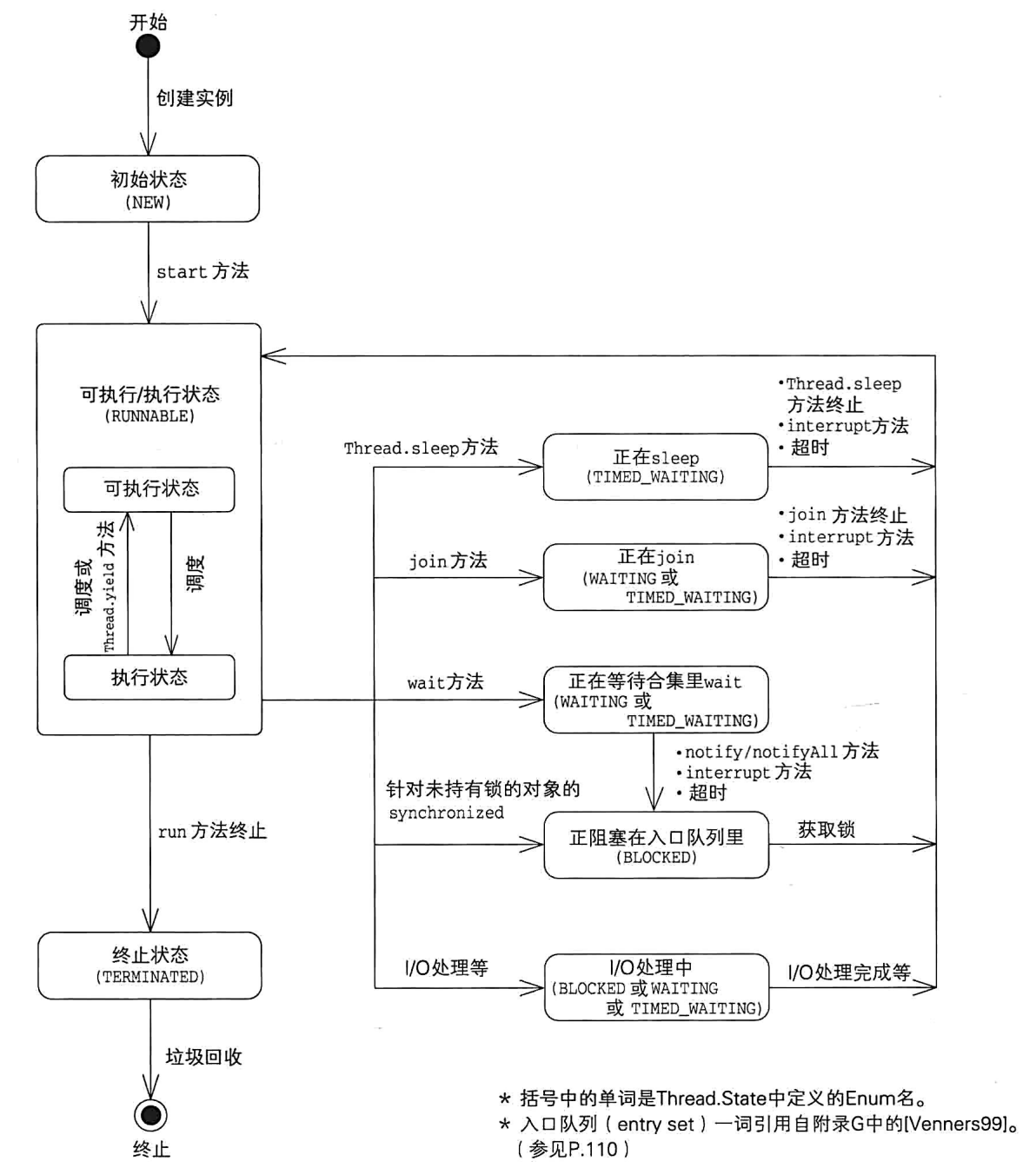

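Thread states

| State | Description |
|---|---|
| NEW | Initial state: the thread has been constructed but start() has not been called yet. |
| RUNNABLE | Running state: Java groups the operating system's ready and running states together under this name. |
| BLOCKED | Blocked state: the thread is blocked waiting for a lock. |
| WAITING | Waiting state: the thread is waiting for another thread to perform a particular action (a notification or an interrupt). |
| TIMED_WAITING | Timed waiting state: unlike WAITING, the thread returns on its own once the specified time has elapsed. |
| TERMINATED | Terminated state: the thread has finished executing. |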
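The first and last states are easy to observe with Thread.getState(). The sketch below uses a hypothetical ThreadStates class; the intermediate states depend on timing and are deliberately not asserted.

```java
// Hypothetical example class: observe a thread's state before start() and after join().
final class ThreadStates {
    // Returns { state before start(), state after join() }.
    static Thread.State[] observe() {
        Thread t = new Thread(() -> { /* no work */ });
        Thread.State before = t.getState(); // NEW: constructed, not started

        t.start();
        try {
            t.join();                       // wait until the thread has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        Thread.State after = t.getState();  // TERMINATED: run() has completed

        return new Thread.State[] { before, after };
    }
}
```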

Thread-related methods and state transitions

java.lang.Object

| Method | Description |
|---|---|
| notify() | Wakes up a single thread that is waiting on this object’s monitor. |
| notifyAll() | Wakes up all threads that are waiting on this object’s monitor. |
| wait() | Causes the current thread to wait until another thread invokes the notify() method or the notifyAll() method for this object. |
| wait(long timeout) | Causes the current thread to wait until either another thread invokes the notify() method or the notifyAll() method for this object, or a specified amount of time has elapsed. |
| wait(long timeout, int nanos) | Causes the current thread to wait until another thread invokes the notify() method or the notifyAll() method for this object, or some other thread interrupts the current thread, or a certain amount of real time has elapsed. |

notify()

Wakes up a single thread that is waiting on this object’s monitor.

This method should only be called by a thread that is the owner of this object’s monitor. A thread becomes the owner of the object’s monitor in one of three ways:

- By executing a synchronized instance method of that object.

- By executing the body of a synchronized statement that synchronizes on the object.

- For objects of type Class, by executing a synchronized static method of that class.

Only one thread at a time can own an object’s monitor.

notifyAll()

This method should only be called by a thread that is the owner of this object’s monitor.

A thread becomes the owner of the object’s monitor in one of three ways:

- By executing a synchronized instance method of that object.

- By executing the body of a synchronized statement that synchronizes on the object.

- For objects of type Class, by executing a synchronized static method of that class.

Only one thread at a time can own an object’s monitor.

wait()

Causes the current thread to wait until another thread invokes the notify() method or the notifyAll() method for this object.

The current thread must own this object’s monitor.

The thread releases ownership of this monitor and waits until another thread notifies threads waiting on this object’s monitor to wake up either through a call to the notify method or the notifyAll method.

wait(long timeout)

Causes the current thread to wait until either another thread invokes the notify() method or the notifyAll() method for this object, or a specified amount of time has elapsed.

The current thread must own this object’s monitor.

This method causes the current thread (call it T) to place itself in the wait set for this object and then to relinquish any and all synchronization claims on this object. Thread T becomes disabled for thread scheduling purposes and lies dormant until one of four things happens:

- Some other thread invokes the notify method for this object and thread T happens to be arbitrarily chosen as the thread to be awakened.

- Some other thread invokes the notifyAll method for this object.

- Some other thread interrupts thread T.

- The specified amount of real time has elapsed, more or less. If timeout is zero, however, then real time is not taken into consideration and the thread simply waits until notified.

The thread T is then removed from the wait set for this object and re-enabled for thread scheduling.

A thread can also wake up without being notified, interrupted, or timing out, a so-called spurious wakeup. While this will rarely occur in practice, applications must guard against it by testing for the condition that should have caused the thread to be awakened, and continuing to wait if the condition is not satisfied. In other words, waits should always occur in loops, like this one:

    synchronized (obj) {
        while (<condition does not hold>)
            obj.wait(timeout);
        ... // Perform action appropriate to condition
    }
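A concrete version of the wait loop, as a sketch: Mailbox is a hypothetical one-slot hand-off class, and the condition being waited for is simply that the slot is non-null.

```java
// Hypothetical example class: a one-slot mailbox built on wait()/notifyAll().
final class Mailbox {
    private final Object lock = new Object();
    private String message; // guarded by lock; null means empty

    void put(String m) {
        synchronized (lock) {
            message = m;
            lock.notifyAll(); // wake any thread waiting in take()
        }
    }

    String take() throws InterruptedException {
        synchronized (lock) {
            while (message == null) { // re-check after every wakeup, spurious or not
                lock.wait();          // releases the lock while waiting
            }
            String m = message;
            message = null;
            return m;
        }
    }

    // Small driver: one thread puts, the caller takes.
    static String demo() {
        Mailbox box = new Mailbox();
        new Thread(() -> box.put("hello")).start();
        try {
            return box.take();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

The loop makes the order of put() and take() irrelevant: if the message is already there, take() never waits; if not, it waits until the condition actually holds.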

wait(long timeout, int nanos)

This method is similar to the wait method of one argument, but it allows finer control over the amount of time to wait for a notification before giving up.

Thread state transitions

java.lang.Thread

methods

// Returns this thread's priority.

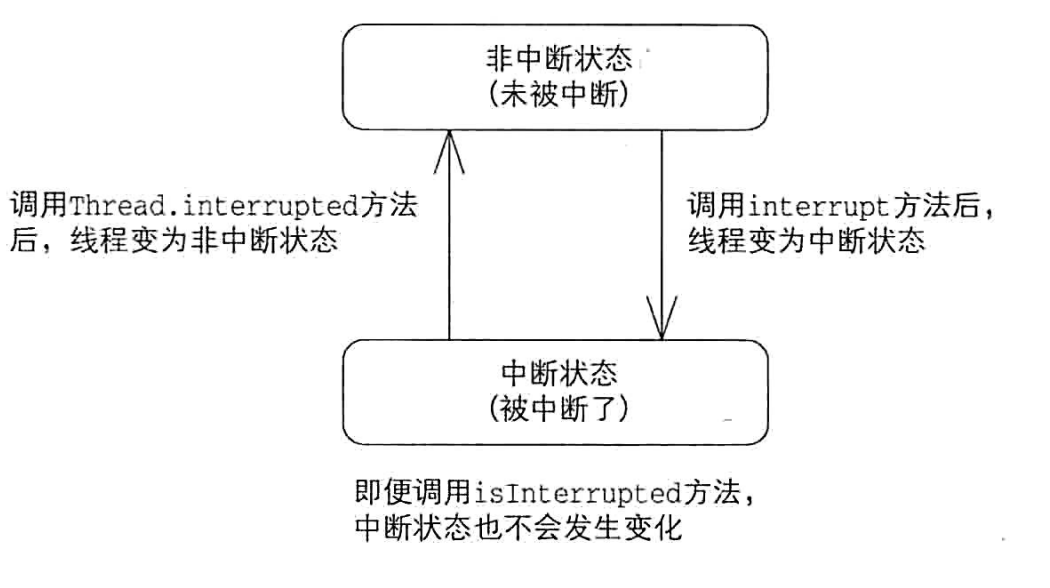

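Interruption

void interrupt() only sets the interrupt status. boolean isInterrupted() is an instance method of Thread that checks the interrupt status of the given thread without changing it. static boolean interrupted() is a static method of Thread that checks and clears the interrupt status of the current thread. It is the only method that clears the status explicitly, and it always acts on the current thread, so it cannot be used to clear the interrupt status of another thread.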

void interrupt()

Interrupts this thread.

If this thread is blocked in an invocation of the wait(), wait(long), or wait(long, int) methods of the Object class, or of the join(), join(long), join(long, int), sleep(long), or sleep(long, int) methods of this class, then its interrupt status will be cleared and it will receive an InterruptedException.

If this thread is blocked in an I/O operation upon an InterruptibleChannel then the channel will be closed, the thread’s interrupt status will be set, and the thread will receive a ClosedByInterruptException.

If this thread is blocked in a Selector then the thread’s interrupt status will be set and it will return immediately from the selection operation, possibly with a non-zero value, just as if the selector’s wakeup method were invoked.

If none of the previous conditions hold then this thread’s interrupt status will be set.

Interrupting a thread that is not alive need not have any effect.

If the thread does not call sleep, wait, join, or a similar method, and no code checks the interrupt status and throws InterruptedException itself, then no InterruptedException is thrown.

InterruptedException

Thrown when a thread is waiting, sleeping, or otherwise occupied, and the thread is interrupted, either before or during the activity. Occasionally a method may wish to test whether the current thread has been interrupted, and if so, to immediately throw this exception. The following code can be used to achieve this effect:

    if (Thread.interrupted())  // Clears interrupted status!
        throw new InterruptedException();
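The interaction between interrupt(), a blocking call, and the interrupt status can be sketched as follows. InterruptDemo is a hypothetical class; it records what the interrupted thread observes.

```java
// Hypothetical example class: interrupt a sleeping thread and record what it sees.
final class InterruptDemo {
    // Returns { InterruptedException was thrown, interrupt status inside the catch block }.
    static boolean[] run() {
        boolean[] result = new boolean[2];
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(60_000); // interrupted long before this elapses
            } catch (InterruptedException e) {
                result[0] = true;
                // The status was cleared when the exception was thrown.
                result[1] = Thread.currentThread().isInterrupted();
            }
        });
        sleeper.start();
        sleeper.interrupt(); // works whether or not the thread has reached sleep() yet
        try {
            sleeper.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result;
    }
}
```

Because the status is cleared by the throw, code that catches InterruptedException without rethrowing it usually calls Thread.currentThread().interrupt() to restore the status for its callers.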

Reference

Chapter 17. Threads and Locks

Thread Document

Object Document

Runnable Document

ThreadLocal Document

Concurrent Package

| package | reference |
|---|---|
| java.util.concurrent | package tree package summary |
| java.util.concurrent.locks | package tree package summary |
| java.util.concurrent.atomic | package tree package summary |

http://www.cs.rochester.edu/research/synchronization/pseudocode/duals.html

AQS

The basic framework on which the utilities in the concurrency package are built

AbstractQueuedSynchronizer source code in the JDK

AbstractQueuedSynchronizer Document

Common synchronization tools

| Class | Desc |
|---|---|
| Semaphore | A counting semaphore. |
| CountDownLatch | A synchronization aid that allows one or more threads to wait until a set of operations being performed in other threads completes. |
| CyclicBarrier | A synchronization aid that allows a set of threads to all wait for each other to reach a common barrier point. |
| Exchanger | A synchronization point at which threads can pair and swap elements within pairs. |
| Phaser | A reusable synchronization barrier, similar in functionality to CyclicBarrier and CountDownLatch but supporting more flexible usage. |
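As one example from this table, a CountDownLatch lets a coordinating thread wait for a fixed number of workers. The class LatchDemo and its method are hypothetical names for this sketch.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class: wait for n workers with a CountDownLatch.
final class LatchDemo {
    // Starts n workers and returns how many had finished when await() returned.
    static int runWorkers(int n) {
        CountDownLatch done = new CountDownLatch(n);
        AtomicInteger finished = new AtomicInteger();
        for (int i = 0; i < n; i++) {
            new Thread(() -> {
                finished.incrementAndGet(); // the "work"
                done.countDown();           // signal completion
            }).start();
        }
        try {
            done.await();                   // blocks until the count reaches zero
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return finished.get();
    }
}
```

A latch is single-use: once the count reaches zero it stays there. CyclicBarrier or Phaser would be the candidates when the same rendezvous has to repeat.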

queue

| Class | Desc |
|---|---|
| BlockingQueue | A Queue that additionally supports operations that wait for the queue to become non-empty when retrieving an element, and wait for space to become available in the queue when storing an element. |
| ArrayBlockingQueue | A bounded blocking queue backed by an array. |
| LinkedBlockingQueue | An optionally-bounded blocking queue based on linked nodes. |
| PriorityBlockingQueue | An unbounded blocking queue that uses the same ordering rules as class PriorityQueue and supplies blocking retrieval operations. |
| DelayQueue<E extends Delayed> | An unbounded blocking queue of Delayed elements, in which an element can only be taken when its delay has expired. |
| SynchronousQueue | A blocking queue in which each insert operation must wait for a corresponding remove operation by another thread, and vice versa. |
| ConcurrentLinkedQueue | An unbounded thread-safe queue based on linked nodes. |
| TransferQueue | A BlockingQueue in which producers may wait for consumers to receive elements. |
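A bounded BlockingQueue is the usual building block for a producer-consumer hand-off. In this sketch the class ProducerConsumer and the POISON end-of-stream marker are assumptions made for the example, not part of the JDK.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical example class: one producer, one consumer, bounded queue.
final class ProducerConsumer {
    private static final int POISON = -1; // hypothetical end-of-stream marker

    // The producer sends 1..n followed by POISON; the consumer sums what it receives.
    static int sumOneTo(int n) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    queue.put(i);   // blocks while the queue is full
                }
                queue.put(POISON);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        int sum = 0;
        try {
            while (true) {
                int item = queue.take(); // blocks while the queue is empty
                if (item == POISON) {
                    break;
                }
                sum += item;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }
}
```

The small capacity forces the producer to wait for the consumer, which is the back-pressure a bounded queue is meant to provide.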

map

| Class | Desc |
|---|---|
| ConcurrentMap<K,V> | A Map providing thread safety and atomicity guarantees. |
| ConcurrentHashMap<K,V> | A hash table supporting full concurrency of retrievals and high expected concurrency for updates. |
| ConcurrentSkipListMap<K,V> | A scalable concurrent ConcurrentNavigableMap implementation. |
| ConcurrentNavigableMap<K,V> | A ConcurrentMap supporting NavigableMap operations, and recursively so for its navigable sub-maps. |
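The atomicity guarantees matter for compound updates such as "increment the count for this key". A sketch using ConcurrentMap.merge, with a hypothetical WordCount class:

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical example class: count words from several threads without explicit locks.
final class WordCount {
    // Thread t handles words t, t + threads, t + 2 * threads, ...
    static ConcurrentMap<String, Integer> count(String[] words, int threads) {
        ConcurrentMap<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int offset = t;
            workers[t] = new Thread(() -> {
                for (int i = offset; i < words.length; i += threads) {
                    // merge() performs the read-modify-write for a key atomically
                    counts.merge(words[i], 1, Integer::sum);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counts;
    }
}
```

Writing the same update as get() followed by put() would be a race even on a ConcurrentHashMap, because the two calls are not atomic together.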

copy on write

| Class | Desc |
|---|---|
| CopyOnWriteArrayList | A thread-safe variant of ArrayList in which all mutative operations (add, set, and so on) are implemented by making a fresh copy of the underlying array. |
| CopyOnWriteArraySet | A Set that uses an internal CopyOnWriteArrayList for all of its operations. |
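One consequence of copying on every write is that an iterator works on a snapshot of the array. The sketch below (hypothetical class SnapshotIteration) modifies the list while iterating, which would throw ConcurrentModificationException on an ArrayList.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical example class: iterate a CopyOnWriteArrayList while adding to it.
final class SnapshotIteration {
    // Returns { elements visited by the loop, final list size }.
    static int[] run() {
        List<String> list = new CopyOnWriteArrayList<>(Arrays.asList("a", "b"));
        int visited = 0;
        for (String s : list) {  // iterates over the array as it was at loop start
            list.add(s + "!");   // each add copies the array; the iterator is unaffected
            visited++;
        }
        return new int[] { visited, list.size() };
    }
}
```

The loop visits only the two original elements while the list grows to four. This suits read-mostly data; with frequent writes the copying cost dominates.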

locks

| Class | Desc |
|---|---|
| Condition | Condition factors out the Object monitor methods (wait, notify and notifyAll) into distinct objects to give the effect of having multiple wait-sets per object, by combining them with the use of arbitrary Lock implementations. |
| Lock | Lock implementations provide more extensive locking operations than can be obtained using synchronized methods and statements. |
| ReadWriteLock | A ReadWriteLock maintains a pair of associated locks, one for read-only operations and one for writing. |
| AbstractOwnableSynchronizer | A synchronizer that may be exclusively owned by a thread. |
| AbstractQueuedLongSynchronizer | A version of AbstractQueuedSynchronizer in which synchronization state is maintained as a long. |
| AbstractQueuedSynchronizer | Provides a framework for implementing blocking locks and related synchronizers (semaphores, events, etc) that rely on first-in-first-out (FIFO) wait queues. |
| LockSupport | Basic thread blocking primitives for creating locks and other synchronization classes. |
| ReentrantLock | A reentrant mutual exclusion Lock with the same basic behavior and semantics as the implicit monitor lock accessed using synchronized methods and statements, but with extended capabilities. |
| ReentrantReadWriteLock | An implementation of ReadWriteLock supporting similar semantics to ReentrantLock. |
| ReentrantReadWriteLock.ReadLock | The lock returned by method ReentrantReadWriteLock.readLock(). |
| ReentrantReadWriteLock.WriteLock | The lock returned by method ReentrantReadWriteLock.writeLock(). |
| StampedLock | A capability-based lock with three modes for controlling read/write access. |
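A typical use of ReadWriteLock is guarding a plain map that is read far more often than it is written. RwCache below is a hypothetical class written for this sketch.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical example class: a HashMap guarded by a read/write lock pair.
final class RwCache {
    private final Map<String, String> data = new HashMap<>();
    private final ReadWriteLock lock = new ReentrantReadWriteLock();

    // Many readers may hold the read lock at the same time.
    String get(String key) {
        lock.readLock().lock();
        try {
            return data.get(key);
        } finally {
            lock.readLock().unlock();
        }
    }

    // The write lock is exclusive: it waits for readers and blocks new ones.
    void put(String key, String value) {
        lock.writeLock().lock();
        try {
            data.put(key, value);
        } finally {
            lock.writeLock().unlock();
        }
    }
}
```

Unlike synchronized, an explicit Lock is not released automatically, so the unlock call belongs in a finally block as shown.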

atomic

| Class | Desc |
|---|---|
| AtomicBoolean | A boolean value that may be updated atomically. |
| AtomicInteger | An int value that may be updated atomically. |
| AtomicIntegerArray | An int array in which elements may be updated atomically. |
| AtomicIntegerFieldUpdater | A reflection-based utility that enables atomic updates to designated volatile int fields of designated classes. |
| AtomicLong | A long value that may be updated atomically. |
| AtomicLongArray | A long array in which elements may be updated atomically. |
| AtomicLongFieldUpdater | A reflection-based utility that enables atomic updates to designated volatile long fields of designated classes. |
| AtomicMarkableReference | An AtomicMarkableReference maintains an object reference along with a mark bit, that can be updated atomically. |
| AtomicReference | An object reference that may be updated atomically. |
| AtomicReferenceArray | An array of object references in which elements may be updated atomically. |
| AtomicReferenceFieldUpdater<T,V> | A reflection-based utility that enables atomic updates to designated volatile reference fields of designated classes. |
| AtomicStampedReference | An AtomicStampedReference maintains an object reference along with an integer “stamp”, that can be updated atomically. |
| DoubleAccumulator | One or more variables that together maintain a running double value updated using a supplied function. |
| DoubleAdder | One or more variables that together maintain an initially zero double sum. |
| LongAccumulator | One or more variables that together maintain a running long value updated using a supplied function. |
| LongAdder | One or more variables that together maintain an initially zero long sum. |

task

| Class | Desc |
|---|---|
| java.lang.Runnable | |
| Callable | A task that returns a result and may throw an exception. |
| Delayed | A mix-in style interface for marking objects that should be acted upon after a given delay. |

future && futureTask

| Class | Desc |
|---|---|
| CompletionStage | A stage of a possibly asynchronous computation, that performs an action or computes a value when another CompletionStage completes. |
| Future | A Future represents the result of an asynchronous computation. |
| RunnableFuture | A Future that is Runnable. |
| RunnableScheduledFuture | A ScheduledFuture that is Runnable. |
| ScheduledFuture | A delayed result-bearing action that can be cancelled. |
| FutureTask | A cancellable asynchronous computation. |
| CompletableFuture | A Future that may be explicitly completed (setting its value and status), and may be used as a CompletionStage, supporting dependent functions and actions that trigger upon its completion. |
| CompletionService | A service that decouples the production of new asynchronous tasks from the consumption of the results of completed tasks. |
| CompletableFuture.AsynchronousCompletionTask | A marker interface identifying asynchronous tasks produced by async methods. |
| ExecutorCompletionService | A CompletionService that uses a supplied Executor to execute tasks. |
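The "dependent functions" part of CompletableFuture can be sketched with two independent stages combined into one result. FuturePipeline and the constant values are assumptions for the example.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical example class: combine two asynchronous results.
final class FuturePipeline {
    static int compute() {
        // Both suppliers run asynchronously (by default on the common ForkJoinPool).
        CompletableFuture<Integer> price = CompletableFuture.supplyAsync(() -> 40);
        CompletableFuture<Integer> tax = CompletableFuture.supplyAsync(() -> 2);

        // thenCombine runs once both stages have completed.
        CompletableFuture<Integer> total = price.thenCombine(tax, Integer::sum);

        return total.join(); // wait for the combined result
    }
}
```

join() is used here instead of get() only because it throws an unchecked exception on failure, which keeps the sketch short.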

executor

Using thread pools

| Class | Desc |
|---|---|
| ThreadFactory | An object that creates new threads on demand. |
| Executors | Factory and utility methods for Executor, ExecutorService, ScheduledExecutorService, ThreadFactory, and Callable classes defined in this package. |
| Executor | An object that executes submitted Runnable tasks. |
| ExecutorService | An Executor that provides methods to manage termination and methods that can produce a Future for tracking progress of one or more asynchronous tasks. |
| AbstractExecutorService | Provides default implementations of ExecutorService execution methods. |
| ScheduledExecutorService | An ExecutorService that can schedule commands to run after a given delay, or to execute periodically. |
| ThreadPoolExecutor | An ExecutorService that executes each submitted task using one of possibly several pooled threads, normally configured using Executors factory methods. |
| ScheduledThreadPoolExecutor | A ThreadPoolExecutor that can additionally schedule commands to run after a given delay, or to execute periodically. |
| RejectedExecutionHandler | A handler for tasks that cannot be executed by a ThreadPoolExecutor. |
| ThreadPoolExecutor.AbortPolicy | A handler for rejected tasks that throws a RejectedExecutionException. |
| ThreadPoolExecutor.CallerRunsPolicy | A handler for rejected tasks that runs the rejected task directly in the calling thread of the execute method, unless the executor has been shut down, in which case the task is discarded. |
| ThreadPoolExecutor.DiscardOldestPolicy | A handler for rejected tasks that discards the oldest unhandled request and then retries execute, unless the executor is shut down, in which case the task is discarded. |
| ThreadPoolExecutor.DiscardPolicy | A handler for rejected tasks that silently discards the rejected task. |
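A sketch of how the pieces in this table fit together: a ThreadPoolExecutor with a bounded queue and CallerRunsPolicy, so that overload slows the submitter down instead of dropping work. The class BoundedPool and the chosen sizes are arbitrary assumptions.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example class: bounded pool with caller-runs back-pressure.
final class BoundedPool {
    // Submits `tasks` trivial tasks and returns how many completed.
    static int runTasks(int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 2,                          // core and maximum pool size
                0L, TimeUnit.MILLISECONDS,     // keep-alive for idle extra threads
                new ArrayBlockingQueue<>(4),   // bounded work queue
                new ThreadPoolExecutor.CallerRunsPolicy());
        AtomicInteger completed = new AtomicInteger();
        try {
            for (int i = 0; i < tasks; i++) {
                // When both threads are busy and the queue is full,
                // the submitting thread runs the task itself.
                pool.execute(completed::incrementAndGet);
            }
        } finally {
            pool.shutdown();                   // stop accepting tasks, finish queued ones
        }
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("pool did not terminate in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return completed.get();
    }
}
```

Constructing the executor directly makes the queue bound and the rejection policy explicit, which the Executors factory methods do not expose.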

Read the comments in the JDK ThreadPoolExecutor source.

ForkJoin

| Class | Desc |
|---|---|
| ForkJoinPool | An ExecutorService for running ForkJoinTasks. |
| ForkJoinTask | Abstract base class for tasks that run within a ForkJoinPool. |
| ForkJoinWorkerThread | A thread managed by a ForkJoinPool, which executes ForkJoinTasks. |
| ForkJoinPool.ForkJoinWorkerThreadFactory | Factory for creating new ForkJoinWorkerThreads. |
| ForkJoinPool.ManagedBlocker | Interface for extending managed parallelism for tasks running in ForkJoinPools. |
| CountedCompleter | A ForkJoinTask with a completion action performed when triggered and there are no remaining pending actions. |
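A divide-and-conquer sum is the standard illustration of fork/join. The sketch uses RecursiveTask, a JDK subclass of ForkJoinTask that is not listed in the table above; the class name SumTask and the threshold value are assumptions.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical example class: sum an array by recursive splitting.
final class SumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 1_000; // arbitrary cutoff for sequential work
    private final long[] data;
    private final int from;
    private final int to; // exclusive

    SumTask(long[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += data[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        SumTask left = new SumTask(data, from, mid);
        SumTask right = new SumTask(data, mid, to);
        left.fork();                          // schedule the left half asynchronously
        return right.compute() + left.join(); // do the right half here, then wait for left
    }

    static long sum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }
}
```

Forking one half and computing the other in the current thread keeps the worker busy instead of leaving it idle while it waits on both subtasks.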

deque and others

| Class | Desc |
|---|---|
| BlockingDeque | A Deque that additionally supports blocking operations that wait for the deque to become non-empty when retrieving an element, and wait for space to become available in the deque when storing an element. |
| LinkedBlockingDeque | An optionally-bounded blocking deque based on linked nodes. |
| ConcurrentLinkedDeque | An unbounded concurrent deque based on linked nodes. |
| ConcurrentSkipListSet | A scalable concurrent NavigableSet implementation based on a ConcurrentSkipListMap. |
| ThreadLocalRandom | A random number generator isolated to the current thread. |
| TimeUnit | A TimeUnit represents time durations at a given unit of granularity and provides utility methods to convert across units, and to perform timing and delay operations in these units. |

ThreadMXBean

ThreadMXBean && java.lang.management.ThreadInfo

Some Tips

Multithreading in Disruptor

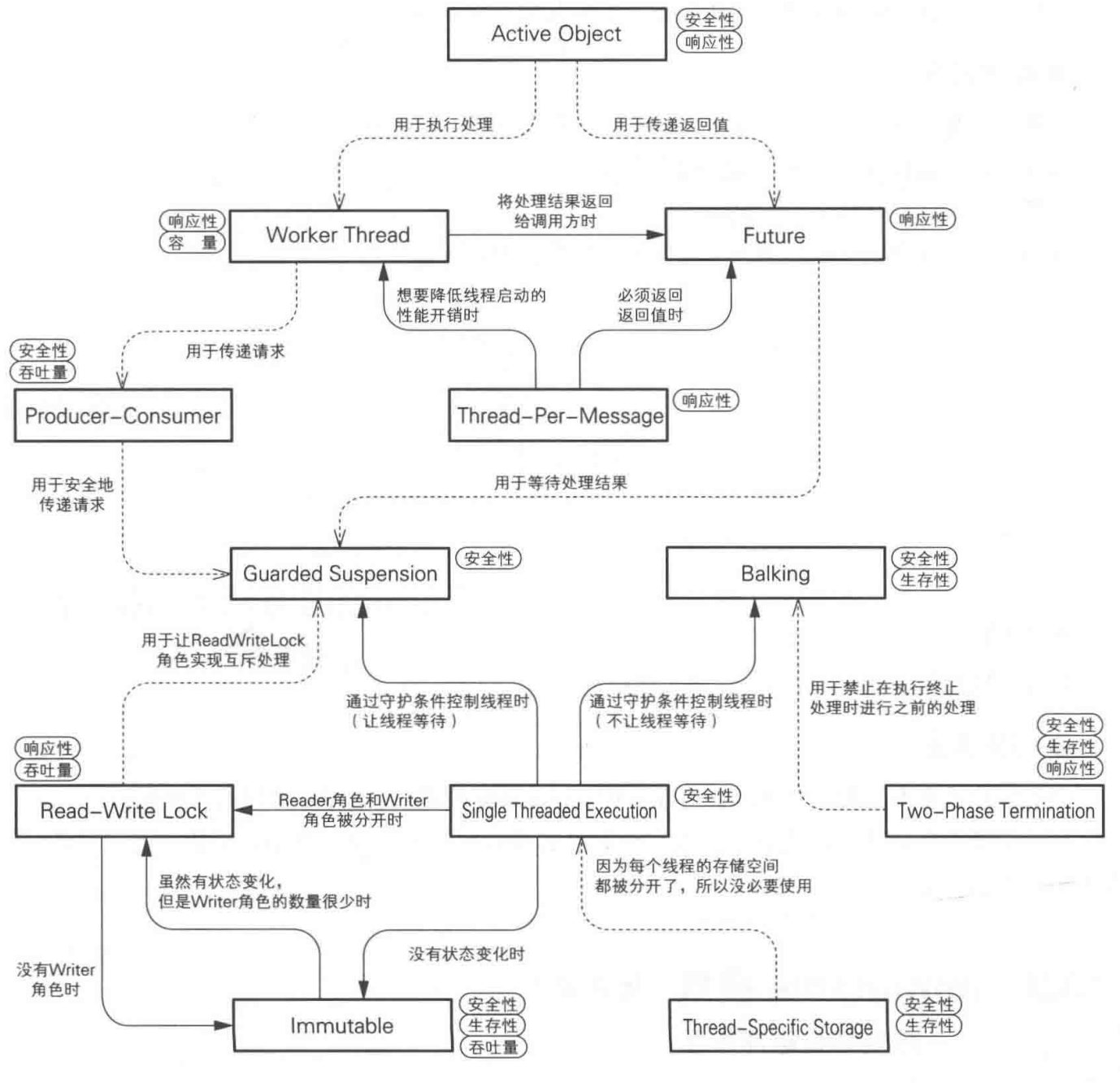

Pattern

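| Pattern | Context | Problem |
|---|---|---|
| Single Threaded Execution | Multiple threads share an instance | If threads change the instance's state at arbitrary times, the instance is no longer safe |
| Immutable | Multiple threads share an instance whose state never changes | Using Single Threaded Execution would reduce throughput |
| Guarded Suspension | Multiple threads share an instance | If threads access the instance without restriction, the instance is no longer safe |
| Balking | | |
| Producer-Consumer | | |
| Read-Write Lock | | |
| Thread-Per-Message | | |
| Worker Pool | | |
| Future | | |
| Two-Phase Termination | | |
| Thread-Specific Storage | | |
| Active Object | | |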

| Pattern | Solution | Implementation |
|---|---|---|
| Single Threaded Execution | Define the critical section strictly and ensure that only one thread at a time can execute it | Can be implemented with synchronized |
| Immutable | To guard against accidentally writing code that changes the instance's state, make the fields that represent that state impossible for threads to modify | Hide the fields with private, and use final to ensure they cannot change |
| Guarded Suspension | | |
| Balking | | |
| Producer-Consumer | | |
| Read-Write Lock | | |
| Thread-Per-Message | | |
| Worker Pool | | |
| Future | | |
| Two-Phase Termination | | |
| Thread-Specific Storage | | |
| Active Object | | |